Index Copernicus is a Poland-based company that offers several services related to scholarly publishing. One of its products is a journal rating system. I am concerned both about the methods it uses to rank journals and about the high proportion of predatory journals in its database.

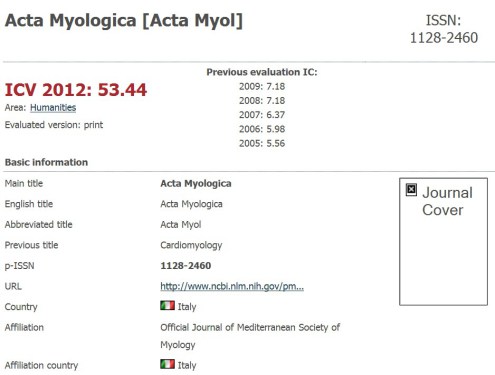

The IC Journals Master List 2012 is sorted by “ICV,” or Index Copernicus Value, with the highest-ranking journals at the top. The second-highest-scoring journal is Acta Myologica, with a score of 53.44. This journal beat out Science, Nature, and many other top science journals.

The information page for this journal on the Index Copernicus website contains many errors. First, there is a dead image link. Second, the journal deals with myology, the study of muscles, but IC misclassifies it as a humanities journal.

The page shows the journal’s earlier scores from 2005 to 2009, increasing from 5.56 to 7.18. (I have observed that Index Copernicus values always go up, never down.) The values for 2010 and 2011 are missing. The 2012 value is an astounding 53.44, and there is no explanation for why it increased so much. The page also labels Acta Myologica as an English title, although the title is actually Latin.

Numerous predatory journals have IC values, and they often display them prominently on their websites. They do this to attract article submissions and the article processing charges those submissions bring in. To potential authors, the value may look like an authentic metric. It resembles the impact factor, so naïve authors may accept it as one.

The IC Evaluation Methodology is here. It seems heavily weighted towards publishing practice rather than scientific impact.

I find the Index Copernicus Value (ICV) highly questionable. In fact, I’d say it’s a pretty worthless measure, especially given how many predatory publishers have established values for their journals, values that increase every year.